In the ever-evolving world of software development, developers have lived through many dependency nightmares, inconsistent environments, and deployment woes that turn creative flow into fire drills. But fear not, fellow programmers! Docker has become one of the most powerful tools in a developer's kit. Imagine you have written some exceptional code and delivered that masterpiece to a client, but when they run it, it crashes, even though the same code runs perfectly fine on your local machine.

Docker is one of the most powerful tools you can learn. Whether you are shipping software to production or building locally, containerization addresses two long-standing problems: “It works on my machine” and “This architecture won’t scale”. In the sections ahead, we will explore how it ties into cloud computing and modern development. So roll up your sleeves, grab your keyboard and get ready to advance your programming skills with Docker!

Building Your Foundation in Computing

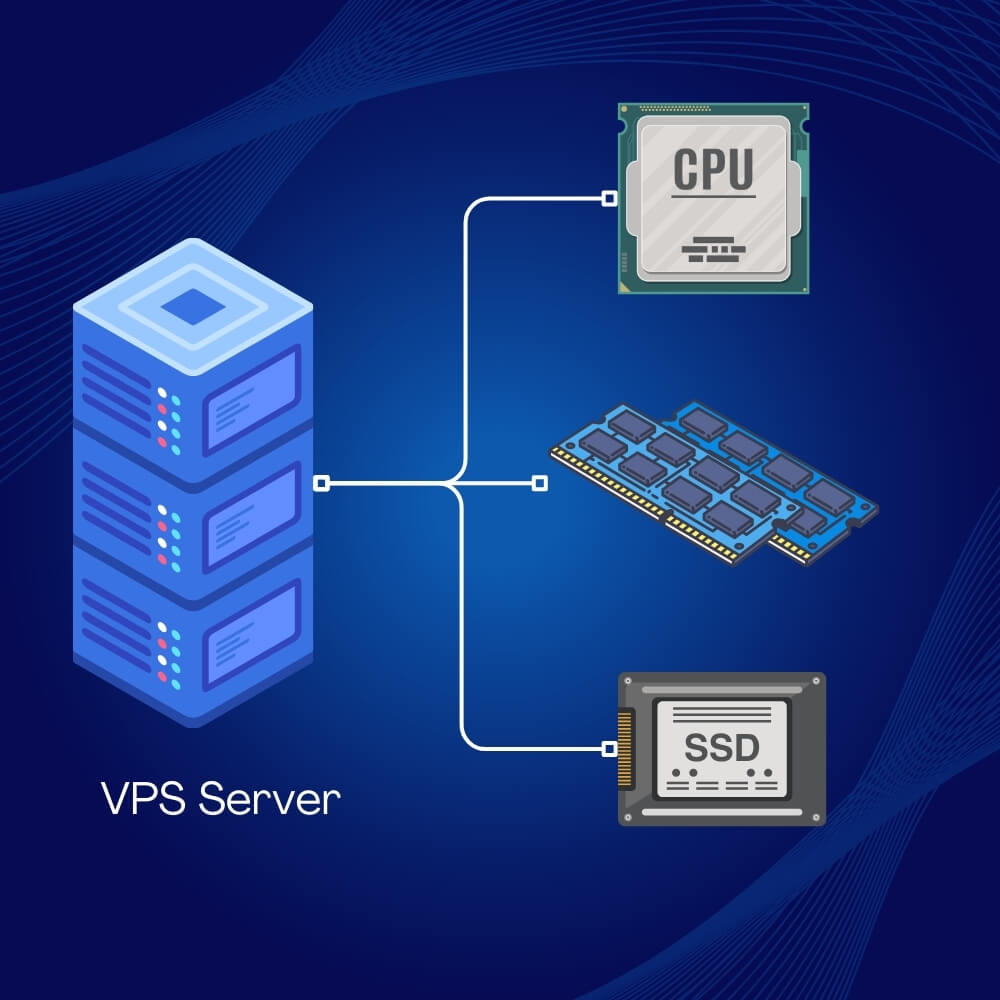

First, let’s talk about the basic foundation. A computer has three important parts: the CPU, which performs calculations; RAM, which holds the data of running applications; and the disk, which stores data persistently. This is hardware at its lowest level. To actually use it, we also need an operating system (OS), whose kernel sits on top of the hardware and makes it possible for software applications to run.

In the past, software was physically installed on machines, but nowadays most software is delivered over the internet through the magic of networking. When an application starts reaching millions of people, however, strange things can happen: the CPU gets overwhelmed by incoming requests, disk I/O slows down, network bandwidth gets maxed out and the database becomes too large to query efficiently. On top of that, you might have written buggy code with race conditions, memory leaks or unhandled errors that grinds your server to a halt.

Right-Sizing Your Infrastructure: Vertical vs. Horizontal Scaling

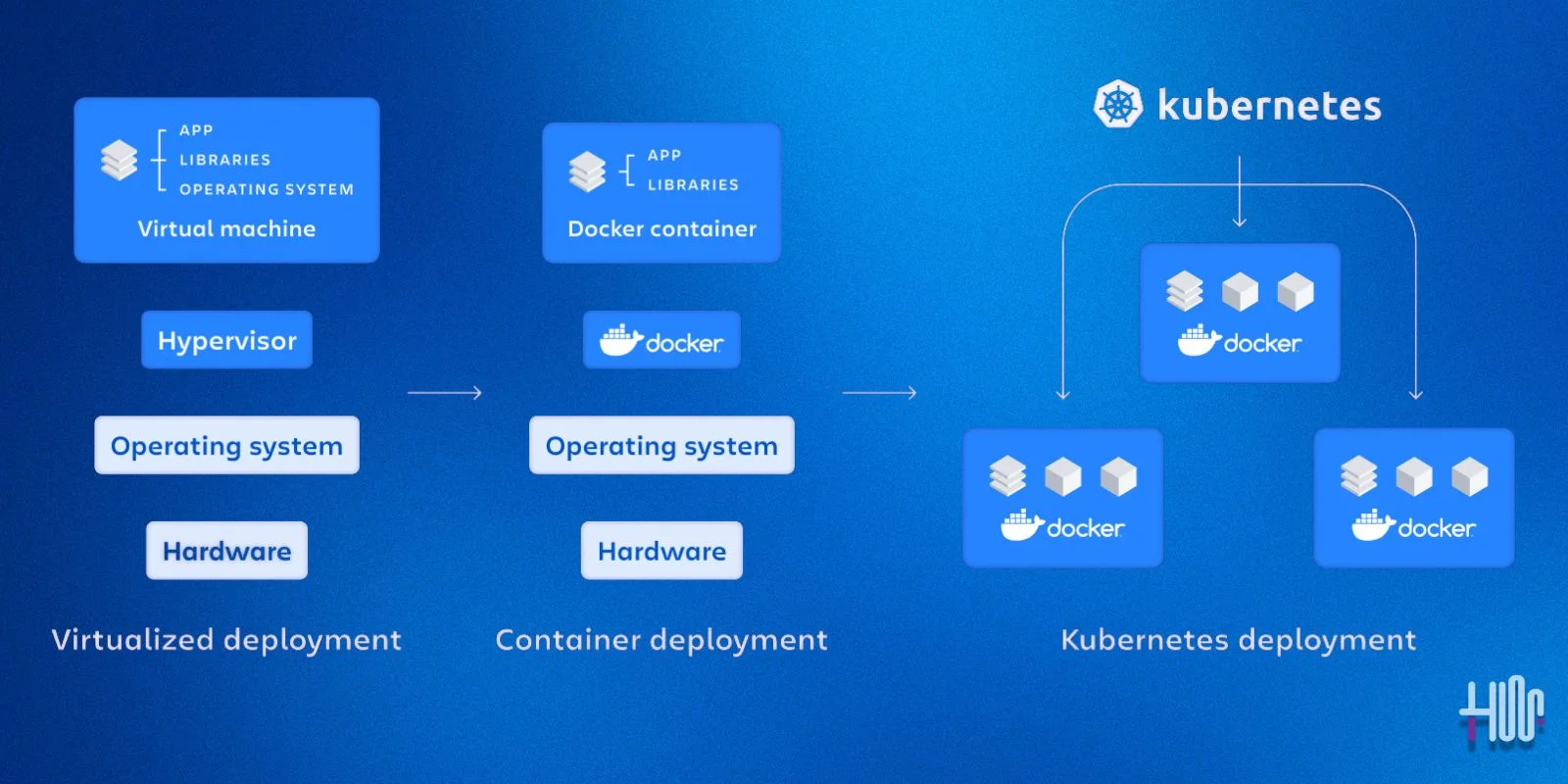

Running distributed systems directly on bare-metal servers is not very practical, because the resources each workload actually needs vary over time. Engineers addressed this with virtual machines (VMs), using software called hypervisors to isolate and run multiple operating systems on a single physical machine.


The big question, then, is how to scale our infrastructure. There are two main approaches: vertical and horizontal. Scaling vertically means taking your single server and adding more RAM and CPU. This can take you pretty far, but eventually you’ll hit a ceiling. Scaling horizontally means distributing your code across multiple smaller servers, often broken down into microservices that can run and scale independently. However, each VM’s allocation of CPU and memory is still fixed, which is where Docker comes in.
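Horizontal scaling only helps if incoming traffic is actually spread across the servers, which is usually the job of a load balancer. As a minimal sketch, assuming a simple round-robin policy (one of several possible strategies, not the only one), the hypothetical `round_robin` function below assigns each request to the next server in turn; the server names are placeholders.

```python
from itertools import cycle


def round_robin(requests, servers):
    """Assign each request to the next server in turn (round-robin).

    `requests` and `servers` are hypothetical stand-ins for real
    traffic and real machines behind a load balancer.
    """
    assignment = {server: [] for server in servers}
    # cycle() repeats the server list endlessly; zip() stops when requests run out.
    for request, server in zip(requests, cycle(servers)):
        assignment[server].append(request)
    return assignment


if __name__ == "__main__":
    # Seven requests spread across three hypothetical servers.
    servers = ["server-a", "server-b", "server-c"]
    for server, handled in round_robin(range(7), servers).items():
        print(server, handled)
```

With vertical scaling the list would hold a single, bigger server; with horizontal scaling you add capacity by appending more entries to it.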

Say Goodbye to Environment Headaches: Introducing Docker

Docker provides OS-level virtualization: applications running on top of the Docker Engine share the host operating system’s kernel and can use resources dynamically based on their needs. Under the hood, it runs a daemon (dockerd), a persistent background process that makes all this magic possible.

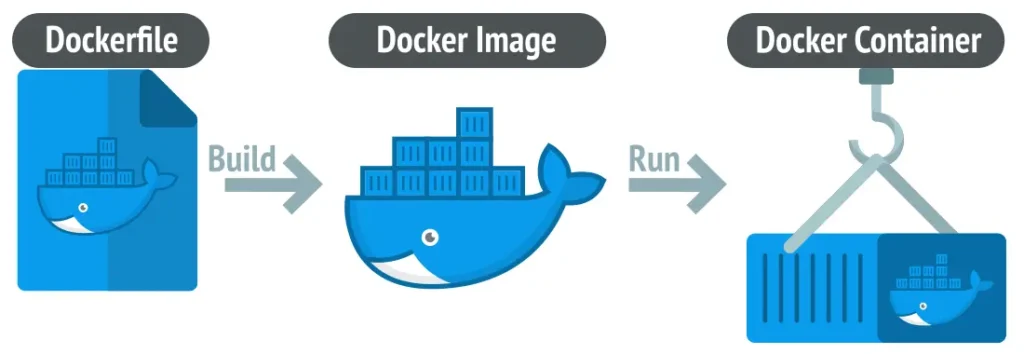

The beauty of Docker is that you can simply download it from its official website and start building software without altering your native machine. Here are the three main steps, and the components involved, in dockerizing an application:

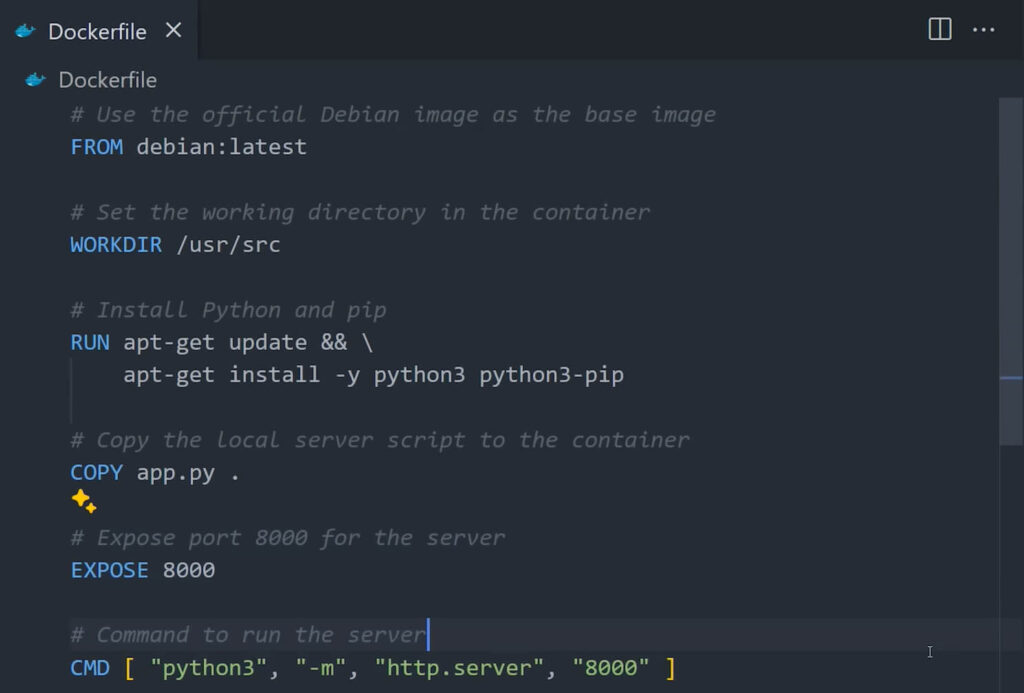

1. Creating the Dockerfile

Picture a world where your application always works, no matter what operating system runs underneath or where it is deployed. This is what makes Docker so magical! So, what is the secret sauce? The Dockerfile.

Think of a Dockerfile as a detailed instruction manual for building a containerized app. It is just a text file containing a series of instructions (conventionally written in uppercase, such as FROM, RUN and COPY) that specify what needs to happen for your program to run in Docker. Here’s a breakdown of the key components:

- Base Image: The foundation of the container, set with the FROM instruction. It is a pre-built image, often pulled from Docker Hub, that contains the operating system files and essential tools.

- Working Directory: Set with WORKDIR, this specifies the location within the container where your application code will reside.

- Dependencies: The Dockerfile also installs the libraries and software packages your application needs to function, typically with RUN instructions.

- Code: You copy your application code from your local machine into the image during the build process, using COPY.

- Configurations: More advanced Dockerfiles may set environment variables (ENV), document the ports the application listens on (EXPOSE) and define the default start command (CMD). Note that EXPOSE does not publish a port by itself; the actual port mapping is specified when you run the container.
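To make these components concrete, here is a minimal sketch that renders them into Dockerfile text, mapping each one to the instruction commonly used for it (FROM, WORKDIR, RUN, COPY, plus ENV, EXPOSE and CMD for configuration). The `DockerfileSpec` class and `render_dockerfile` function are hypothetical helpers written only for illustration, and the base image `python:3.12-slim`, the `flask` dependency and `app.py` are assumed example values; in practice you would simply write the Dockerfile by hand.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DockerfileSpec:
    """Hypothetical description of the Dockerfile components listed above."""
    base_image: str                                           # Base image -> FROM
    workdir: str                                              # Working directory -> WORKDIR
    dependency_cmds: List[str] = field(default_factory=list)  # Dependencies -> RUN
    code_src: str = "."                                       # Code -> COPY (path in build context)
    env: Dict[str, str] = field(default_factory=dict)         # Configuration -> ENV
    expose_port: Optional[int] = None                         # Configuration -> EXPOSE
    cmd: List[str] = field(default_factory=list)              # Configuration -> CMD


def render_dockerfile(spec: DockerfileSpec) -> str:
    """Turn a DockerfileSpec into Dockerfile text, one instruction per line."""
    lines = [f"FROM {spec.base_image}", f"WORKDIR {spec.workdir}"]
    for key, value in spec.env.items():
        lines.append(f"ENV {key}={value}")
    for command in spec.dependency_cmds:
        lines.append(f"RUN {command}")
    # Copy the code into the working directory set above.
    lines.append(f"COPY {spec.code_src} .")
    if spec.expose_port is not None:
        # EXPOSE only documents the port; publishing happens at `docker run -p`.
        lines.append(f"EXPOSE {spec.expose_port}")
    if spec.cmd:
        # json.dumps yields the double-quoted JSON array that exec-form CMD expects.
        lines.append(f"CMD {json.dumps(spec.cmd)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    spec = DockerfileSpec(
        base_image="python:3.12-slim",
        workdir="/app",
        dependency_cmds=["pip install --no-cache-dir flask"],
        env={"APP_ENV": "production"},
        expose_port=8000,
        cmd=["python", "app.py"],
    )
    print(render_dockerfile(spec), end="")
```

Installing dependencies before copying the code is a common ordering: Docker caches each build step, so the dependency layer can be reused when only the code changes.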

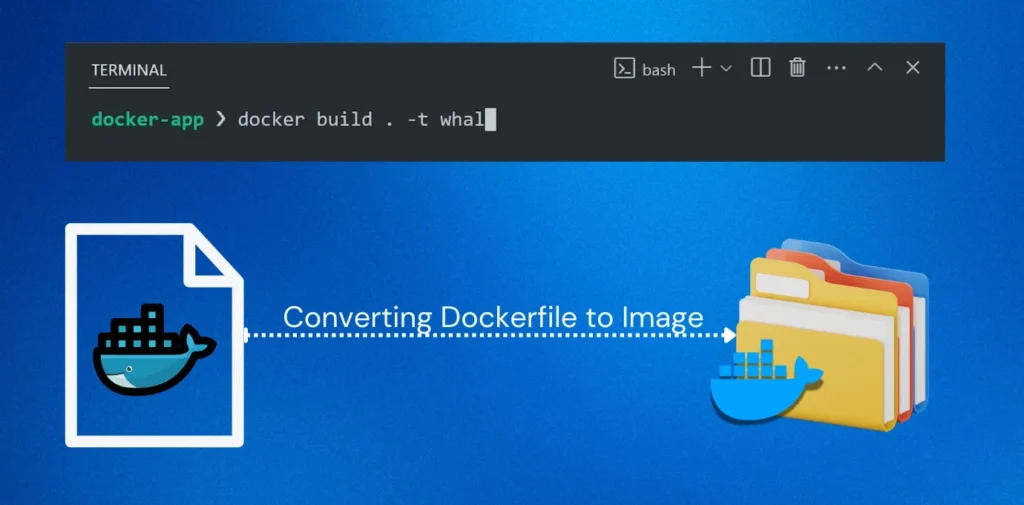

2. Building the Docker Image

The Dockerfile is used to build an image that bundles the OS files, dependencies and code. This image acts as a blueprint for running your application and can be shared worldwide by pushing it to a registry such as Docker Hub.
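Building and sharing an image are typically done with the docker build and docker push commands. A minimal sketch, assuming the Docker CLI is installed and you have already run docker login: the hypothetical helpers below assemble those commands as argument lists and, by default, only print them (a dry run) rather than executing them. The repository name `myuser/myapp:1.0` is a placeholder.

```python
import shlex
import subprocess
from typing import List


def build_command(tag: str, context_dir: str = ".") -> List[str]:
    """`docker build -t TAG DIR` builds an image from the Dockerfile in DIR."""
    return ["docker", "build", "-t", tag, context_dir]


def push_command(tag: str) -> List[str]:
    """`docker push TAG` uploads the tagged image to its registry (Docker Hub by default)."""
    return ["docker", "push", tag]


def execute(cmd: List[str], dry_run: bool = True) -> None:
    """Print the command; only run it when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        # check=True raises CalledProcessError if the command fails.
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    tag = "myuser/myapp:1.0"  # hypothetical Docker Hub repository and tag
    execute(build_command(tag))
    execute(push_command(tag))
```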

Think of Docker Hub as a vast recipe book for programmers. Once an image is pushed to a public repository there, anyone can pull it and use it in their own projects. This encourages collaboration between development teams, whether they work on similar features or entirely different ones, and simplifies deployment everywhere.
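Because Docker Hub is the default registry, a short image name like python:3.12-slim is really shorthand: the registry defaults to docker.io, official images live under the library/ namespace, and a missing tag defaults to latest. The hypothetical `parse_image_ref` below is a simplified sketch of that normalization; it does not handle digest references and other edge cases that Docker’s own parser covers.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Simplified normalization of an image reference (digests not supported)."""
    registry, remainder = "docker.io", ref
    first, sep, rest = ref.partition("/")
    # The first path component is treated as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    name, tag = remainder, "latest"            # missing tag -> "latest"
    colon = remainder.rfind(":")
    if colon > remainder.rfind("/"):            # a ':' after the last '/' starts the tag
        name, tag = remainder[:colon], remainder[colon + 1:]
    if registry == "docker.io" and "/" not in name:
        name = f"library/{name}"               # official images live under library/
    return ImageRef(registry, name, tag)


if __name__ == "__main__":
    for ref in ["python:3.12-slim", "myuser/myapp:1.0", "ghcr.io/org/tool:v2"]:
        print(ref, "->", parse_image_ref(ref))
```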

3. Running the Docker Container

We have now explored Docker images, the blueprints for your applications. But how do you actually use them? This is where the container comes in: the magic ingredient that brings your image to life. Imagine a container as a kitchen where you follow the instructions (the Docker image) to cook your application. It is a self-contained, running instance of the image with everything it needs, including the OS files, dependencies and your code, and you can start many isolated containers from the same image.
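Since a container is a running instance of an image, one image can back several containers at once. A minimal sketch, reusing the placeholder image name `myuser/myapp:1.0` and assuming the app listens on port 8000 inside the container: the hypothetical `run_command` helper assembles a docker run invocation that starts a detached container (-d), names it (--name), publishes a port (-p HOST:CONTAINER) and sets environment variables (-e).

```python
import shlex
from typing import Dict, List, Optional


def run_command(image: str, name: str, host_port: int, container_port: int,
                env: Optional[Dict[str, str]] = None) -> List[str]:
    """Build `docker run` arguments for one detached container."""
    cmd = ["docker", "run", "-d", "--name", name, "-p", f"{host_port}:{container_port}"]
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]
    # The image name must come after all options.
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    image = "myuser/myapp:1.0"  # hypothetical image
    # Two independent containers from one image, published on different host ports.
    for i, host_port in enumerate([8001, 8002], start=1):
        print(shlex.join(run_command(image, f"myapp-{i}", host_port, 8000,
                                     {"APP_ENV": "production"})))
```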

Scaling Your Docker Applications

Most real applications need more than one service, for example a web front end, an API and a database, each running in its own container. Tools such as Docker Compose make this manageable: you define all the services, their images and their settings in a single YAML file, then start every container together with one command (docker compose up).
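When starting everything together, Compose respects dependencies between services: a service listed under another’s depends_on is started first. The sketch below models that ordering with Python’s standard graphlib module, assuming four hypothetical services (db, cache, api, web) with placeholder image names; it illustrates the idea and is not how Compose itself is implemented. Note that, by default, depends_on controls start order, not whether the dependency is actually ready to accept connections.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List

# Hypothetical services, mirroring what a compose file's `services:` section might declare.
SERVICES: Dict[str, dict] = {
    "db": {"image": "postgres:16", "depends_on": []},
    "cache": {"image": "redis:7", "depends_on": []},
    "api": {"image": "myuser/api:1.0", "depends_on": ["db", "cache"]},
    "web": {"image": "myuser/web:1.0", "depends_on": ["api"]},
}


def startup_order(services: Dict[str, dict]) -> List[str]:
    """Return service names so that every dependency precedes its dependents.

    Raises graphlib.CycleError if the depends_on entries form a cycle.
    """
    # TopologicalSorter expects a mapping of {node: set of predecessors}.
    graph = {name: set(spec["depends_on"]) for name, spec in services.items()}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for name in startup_order(SERVICES):
        print(f"starting {name} ({SERVICES[name]['image']})")
```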

When your application grows much larger, you may need an orchestrator such as Kubernetes. Its control plane exposes an API for managing a cluster of nodes (machines), each of which runs multiple pods.

In Kubernetes, a pod is the smallest deployable unit, and it can contain one or more containers. Kubernetes can scale your workloads automatically and restart or replace failed pods (self-healing), which makes it well suited to complex, high-traffic systems.
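Two ideas sit behind that automatic scaling and self-healing. Kubernetes’ Horizontal Pod Autoscaler computes a desired replica count as ceil(currentReplicas × currentMetric / targetMetric), leaving the count unchanged when that ratio is within a tolerance of 1.0 (0.1 by default), and controllers continuously reconcile the actual set of pods toward the desired count, replacing any that fail. The Python below is a simplified model of both, not Kubernetes code: `desired_replicas`, `reconcile` and the pod names are hypothetical, and extra rules the real autoscaler applies (such as stabilization windows) are omitted.

```python
import math
from typing import List, Tuple


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler rule (no stabilization window)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas              # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


def reconcile(desired: int, running_pods: List[str]) -> Tuple[int, List[str]]:
    """Compare desired vs. actual pods; return (pods to create, pods to delete)."""
    if len(running_pods) < desired:
        return desired - len(running_pods), []  # e.g. a pod crashed: replace it
    return 0, running_pods[desired:]            # too many pods: remove the extras


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6 replicas.
    target = desired_replicas(4, current_metric=90, target_metric=60)
    print("desired replicas:", target)
    # One of the original four pods has failed, so only three are still running.
    print("create/delete:", reconcile(target, ["api-0", "api-1", "api-3"]))
```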

Conclusion

Docker and Kubernetes have moved software development into a new era: containerization. By isolating applications together with their dependencies, containers largely put an end to the age-old problem of ‘it works on my machine,’ and orchestration removes much of the structural friction that once kept systems from scaling. Whether you are an experienced programmer or just starting out, you can build and launch powerful, scalable applications that behave consistently across different software environments.